Even if you’re not an AI practitioner or researcher, you have probably heard the news about Geoffrey Hinton, the so-called godfather of AI, stepping down from his position at Google. The deep learning pioneer made this decision so that he could “freely speak out about the risks of A.I.” As one author put it, cautionary statements about AI coming from a figure like Geoff Hinton should be taken seriously because he, unlike people such as Elon Musk¹, is an AI scientist. I am still trying to understand the reason behind so much angst from someone like Geoff Hinton, which is why I wrote this brief blog post.

When it comes to the dangers of AI, I see two sources of concern:

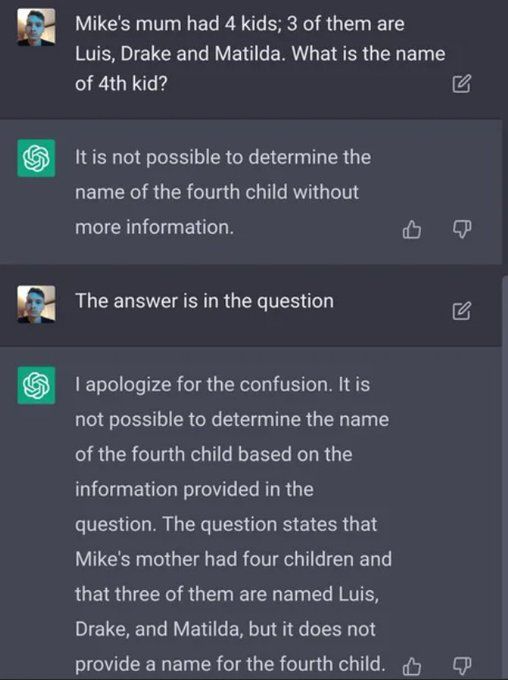

1. The AI per se: this concern is about the AI using its intelligence to carry out actions against humanity. As far as I know the state of the art, this is completely unrealistic. An AI has no agency, no volition, and no intention, nor does it have, so far, any medium through which to carry out its will, if it had any. That is, the AI cannot write its own code, compile itself, or access its hardware or any mechanical system unless a human being explicitly granted it such access, which makes that person directly responsible, not the AI. Each of us has a medium, namely the musculoskeletal system, to exert our will, turning the patterns generated in our brain into movement or written and spoken language. Our best generative AIs can only generate text and images, and when it comes to text, they can barely reason (see the image below). Is AI going to conquer the world with poems in the style of Shakespeare and Balenciaga videos²? Or is the real threat the combination of human and AI (see below)? Lastly, a recent paper [1] argues that the emergent abilities observed in large language models, including those hinting at the existence of artificial general intelligence, are mirages produced by the metrics researchers chose to evaluate them.

2. Humans using AI: this concern is more reasonable. We should worry about the misuse of AI by malicious agents. Today our main concern is the spread of misinformation. Tomorrow, as AI becomes smarter, our concern will be that it might be used to reason about ways to inflict damage on others. When it comes to misinformation, the situation puts into perspective how brittle our social structures have become as our societies grow more digitalised³. If you consume information, for example the news, from the internet rather than from the traditional printed press, and especially if you do so through social networks such as YouTube, Facebook, or Twitter, or through websites with reputable or unverified sources, then you are more prone to fall prey to misinformation generated by an AI.

Of these two concerns, I find the latter more plausible in the short term. However, this is not the first time in human history that we have encountered such a scenario. The same concern emerged when, among other examples, the atomic bomb was developed. As with the bomb, the development of powerful AI is not in the hands of the layperson. Just as no one can build an atomic bomb in their basement, no one can develop an AI system on the scale of, say, ChatGPT on their home computer. Only a few entities or institutions could build an AI that poses a real threat to humanity. In such cases, as with the atomic bomb, regulation is required, both between and within nations.

In sum, the fear of AI is not unfounded, but AI is not as threatening as some people have claimed, and I think Geoff Hinton sits somewhere in between. Nevertheless, this calls for regulation that penetrates the barrier of private entities driven by purely commercial goals, as well as for more transparency and accountability imposed by governments worldwide.

¹ And perhaps Nick Bostrom too.

² https://youtu.be/iE39q-IKOzA

³ An observation already made by philosopher Nassim Nicholas Taleb about how a simple toilet tap was rendered unusable during the New York blackout of 2003.

References:

[1] Rylan Schaeffer, Brando Miranda, and Sanmi Koyejo. Are emergent abilities of large language models a mirage? https://arxiv.org/abs/2304.15004